Connecting to MinIO via the S3 CSI driver

References

https://github.com/ctrox/csi-s3

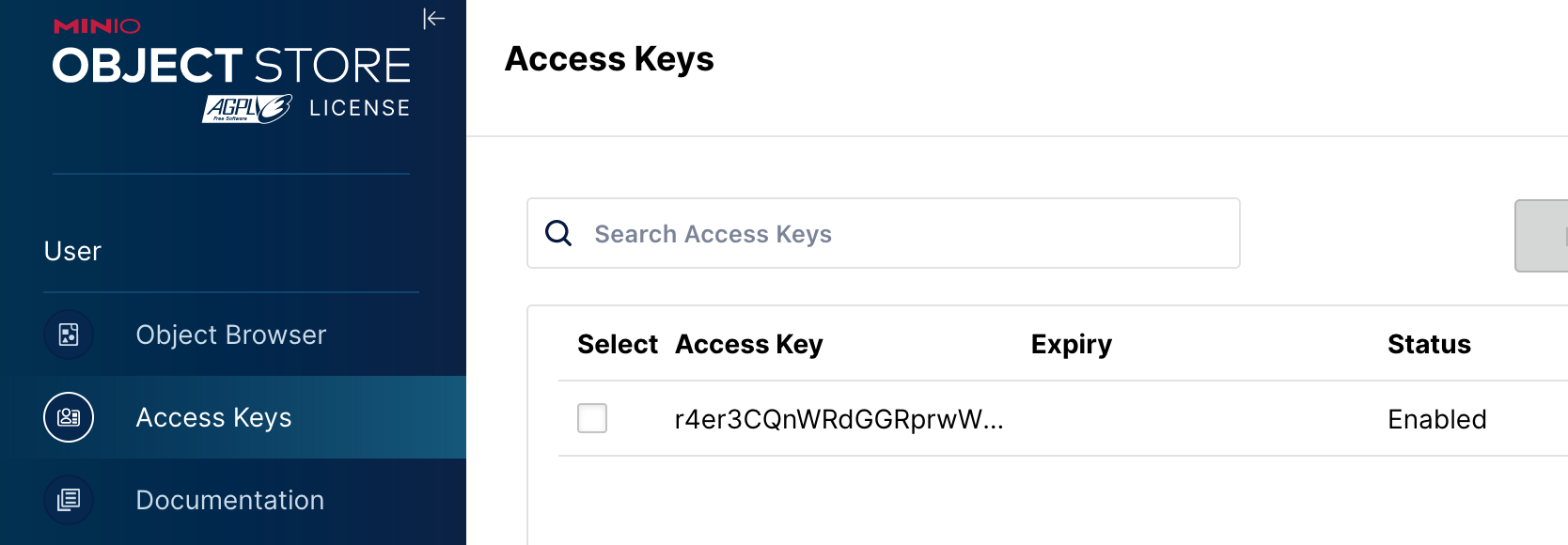

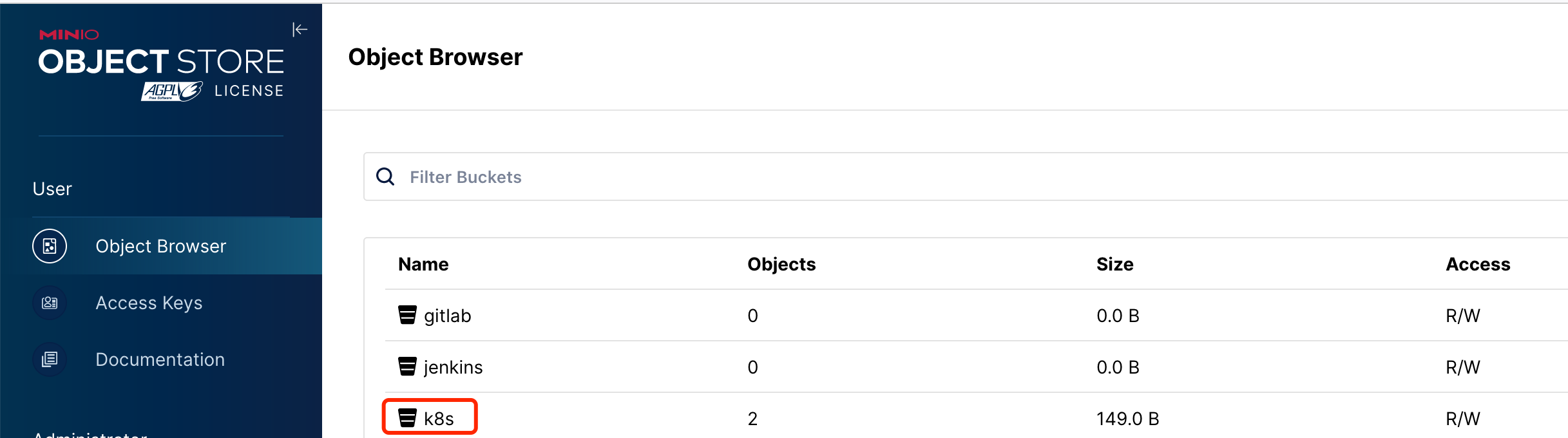

MinIO configuration

An access key and a bucket have already been added on MinIO.
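If you prefer the command line to the web console, the bucket can presumably also be created with the MinIO client `mc`. This is a minimal sketch: the alias name `myminio` and the credential placeholders are assumptions, while the endpoint and the bucket name `k8s` are the ones used in this article.

```shell
# Register the MinIO endpoint under a local alias (alias name is hypothetical).
mc alias set myminio http://10.10.50.16:9000 "<ACCESS_KEY>" "<SECRET_KEY>"

# Create the bucket referenced by the StorageClass; do nothing if it already exists.
mc mb --ignore-existing myminio/k8s

# List buckets to confirm the result.
mc ls myminio
```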

One-step YAML deployment (reference)

cat > s3-csi.yaml <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: csi-s3-secret
  namespace: kube-system
stringData:
  accessKeyID: r4er3CQnWRdGGRprwWkh
  secretAccessKey: dfrI1zbWJxJhktfUlDlTv8h4bz1YyxDHRA0glS8K
  endpoint: http://10.10.50.16:9000
  # For MinIO, region must be left empty; set it to ""
  region: ""
---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: csi-provisioner-sa
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: external-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get", "list"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["list", "watch", "create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: csi-provisioner-role
subjects:
  - kind: ServiceAccount
    name: csi-provisioner-sa
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: external-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---

kind: Service
apiVersion: v1
metadata:
  name: csi-provisioner-s3
  namespace: kube-system
  labels:
    app: csi-provisioner-s3
spec:
  selector:
    app: csi-provisioner-s3
  ports:
    - name: csi-s3-dummy
      port: 65535
---
kind: StatefulSet
apiVersion: apps/v1
metadata:
  name: csi-provisioner-s3
  namespace: kube-system
spec:
  serviceName: "csi-provisioner-s3"
  replicas: 1
  selector:
    matchLabels:
      app: csi-provisioner-s3
  template:
    metadata:
      labels:
        app: csi-provisioner-s3
    spec:
      serviceAccount: csi-provisioner-sa
      tolerations:
        - key: node-role.kubernetes.io/master
          operator: "Exists"
      containers:
        - name: csi-provisioner
          image: quay.io/k8scsi/csi-provisioner:v2.1.0
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=4"
          env:
            - name: ADDRESS
              value: /var/lib/kubelet/plugins/ch.ctrox.csi.s3-driver/csi.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /var/lib/kubelet/plugins/ch.ctrox.csi.s3-driver
        - name: csi-s3
          image: ctrox/csi-s3:v1.2.0-rc.2
          args:
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--nodeid=$(NODE_ID)"
            - "--v=4"
          env:
            - name: CSI_ENDPOINT
              value: unix:///var/lib/kubelet/plugins/ch.ctrox.csi.s3-driver/csi.sock
            - name: NODE_ID
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          imagePullPolicy: "Always"
          volumeMounts:
            - name: socket-dir
              mountPath: /var/lib/kubelet/plugins/ch.ctrox.csi.s3-driver
      volumes:
        - name: socket-dir
          emptyDir: {}
---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: csi-attacher-sa
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: external-attacher-runner
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get", "list"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["volumeattachments", "volumeattachments/status", "storageclass"]
    verbs: ["get", "list", "watch", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: csi-attacher-role
subjects:
  - kind: ServiceAccount
    name: csi-attacher-sa
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: external-attacher-runner
  apiGroup: rbac.authorization.k8s.io
---

# needed for StatefulSet
kind: Service
apiVersion: v1
metadata:
  name: csi-attacher-s3
  namespace: kube-system
  labels:
    app: csi-attacher-s3
spec:
  selector:
    app: csi-attacher-s3
  ports:
    - name: csi-s3-dummy
      port: 65535
---
kind: StatefulSet
apiVersion: apps/v1
metadata:
  name: csi-attacher-s3
  namespace: kube-system
spec:
  serviceName: "csi-attacher-s3"
  replicas: 1
  selector:
    matchLabels:
      app: csi-attacher-s3
  template:
    metadata:
      labels:
        app: csi-attacher-s3
    spec:
      serviceAccount: csi-attacher-sa
      tolerations:
        - key: node-role.kubernetes.io/master
          operator: "Exists"
      containers:
        - name: csi-attacher
          image: quay.io/k8scsi/csi-attacher:v3.1.0
          args:
            - "--v=4"
            - "--csi-address=$(ADDRESS)"
          env:
            - name: ADDRESS
              value: /var/lib/kubelet/plugins/ch.ctrox.csi.s3-driver/csi.sock
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /var/lib/kubelet/plugins/ch.ctrox.csi.s3-driver
      volumes:
        - name: socket-dir
          hostPath:
            path: /var/lib/kubelet/plugins/ch.ctrox.csi.s3-driver
            type: DirectoryOrCreate
---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: csi-s3
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: csi-s3
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get", "list"]
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "update"]
  - apiGroups: [""]
    resources: ["namespaces"]
    verbs: ["get", "list"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["volumeattachments", "volumeattachments/status", "storageclass"]
    verbs: ["get", "list", "watch", "update"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: csi-s3
subjects:
  - kind: ServiceAccount
    name: csi-s3
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: csi-s3
  apiGroup: rbac.authorization.k8s.io
---

kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: csi-s3
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: csi-s3
  template:
    metadata:
      labels:
        app: csi-s3
    spec:
      serviceAccount: csi-s3
      hostNetwork: true
      containers:
        - name: driver-registrar
          image: quay.io/k8scsi/csi-node-driver-registrar:v1.2.0
          args:
            - "--kubelet-registration-path=$(DRIVER_REG_SOCK_PATH)"
            - "--v=4"
            - "--csi-address=$(ADDRESS)"
          env:
            - name: ADDRESS
              value: /csi/csi.sock
            - name: DRIVER_REG_SOCK_PATH
              value: /var/lib/kubelet/plugins/ch.ctrox.csi.s3-driver/csi.sock
            - name: KUBE_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          volumeMounts:
            - name: plugin-dir
              mountPath: /csi
            - name: registration-dir
              mountPath: /registration/
        - name: csi-s3
          securityContext:
            privileged: true
            capabilities:
              add: ["SYS_ADMIN"]
            allowPrivilegeEscalation: true
          image: ctrox/csi-s3:v1.2.0-rc.2
          imagePullPolicy: "Always"
          args:
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--nodeid=$(NODE_ID)"
            - "--v=4"
          env:
            - name: CSI_ENDPOINT
              value: unix:///csi/csi.sock
            - name: NODE_ID
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          volumeMounts:
            - name: plugin-dir
              mountPath: /csi
            - name: pods-mount-dir
              mountPath: /var/lib/kubelet/pods
              mountPropagation: "Bidirectional"
            - name: fuse-device
              mountPath: /dev/fuse
      volumes:
        - name: registration-dir
          hostPath:
            path: /var/lib/kubelet/plugins_registry/
            type: DirectoryOrCreate
        - name: plugin-dir
          hostPath:
            path: /var/lib/kubelet/plugins/ch.ctrox.csi.s3-driver
            type: DirectoryOrCreate
        - name: pods-mount-dir
          hostPath:
            path: /var/lib/kubelet/pods
            type: Directory
        - name: fuse-device
          hostPath:
            path: /dev/fuse
---

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: csi-s3
provisioner: ch.ctrox.csi.s3-driver
parameters:
  # specify which mounter to use
  # can be set to rclone, s3fs, goofys or s3backer
  mounter: s3fs
  # By default the CSI driver creates one bucket per volume. Alternatively, create a bucket on MinIO in advance and specify it below
  bucket: k8s
  csi.storage.k8s.io/provisioner-secret-name: csi-s3-secret
  csi.storage.k8s.io/provisioner-secret-namespace: kube-system
  csi.storage.k8s.io/controller-publish-secret-name: csi-s3-secret
  csi.storage.k8s.io/controller-publish-secret-namespace: kube-system
  csi.storage.k8s.io/node-stage-secret-name: csi-s3-secret
  csi.storage.k8s.io/node-stage-secret-namespace: kube-system
  csi.storage.k8s.io/node-publish-secret-name: csi-s3-secret
  csi.storage.k8s.io/node-publish-secret-namespace: kube-system
EOF

kubectl apply -f s3-csi.yaml
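After applying the manifest it is worth confirming that the three workloads actually came up before creating any PVC. The following is a sketch of one way to check; the resource names and labels are the ones defined in the manifest above, and the commands assume your kubeconfig points at the target cluster.

```shell
# Wait for the provisioner, attacher and node plugin to become ready.
kubectl -n kube-system rollout status statefulset/csi-provisioner-s3
kubectl -n kube-system rollout status statefulset/csi-attacher-s3
kubectl -n kube-system rollout status daemonset/csi-s3

# Show the pods of all three components and the node each one runs on.
kubectl -n kube-system get pods -l 'app in (csi-provisioner-s3, csi-attacher-s3, csi-s3)' -o wide

# Confirm that the StorageClass exists.
kubectl get storageclass csi-s3
```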

Step-by-step deployment

Prepare the following Secret (save it to a file and apply it with kubectl apply -f):

apiVersion: v1
kind: Secret
metadata:
  name: csi-s3-secret
  namespace: kube-system
stringData:
  accessKeyID: r4er3CQnWRdGGRprwWkh
  secretAccessKey: dfrI1zbWJxJhktfUlDlTv8h4bz1YyxDHRA0glS8K
  endpoint: http://10.10.50.16:9000
  # For MinIO, region must be left empty; set it to ""
  region: ""

Deploy the remaining manifests:

git clone https://github.com/ctrox/csi-s3.git
cd csi-s3
cd deploy/kubernetes
kubectl create -f provisioner.yaml
kubectl create -f attacher.yaml
kubectl create -f csi-s3.yaml

Custom StorageClass

cat > storageclass.yaml <<'EOF'
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: csi-s3
provisioner: ch.ctrox.csi.s3-driver
parameters:
  # specify which mounter to use
  # can be set to rclone, s3fs, goofys or s3backer
  mounter: s3fs
  # By default the CSI driver creates one bucket per volume. Alternatively, create a bucket on MinIO in advance and specify it below
  bucket: k8s
  csi.storage.k8s.io/provisioner-secret-name: csi-s3-secret
  csi.storage.k8s.io/provisioner-secret-namespace: kube-system
  csi.storage.k8s.io/controller-publish-secret-name: csi-s3-secret
  csi.storage.k8s.io/controller-publish-secret-namespace: kube-system
  csi.storage.k8s.io/node-stage-secret-name: csi-s3-secret
  csi.storage.k8s.io/node-stage-secret-namespace: kube-system
  csi.storage.k8s.io/node-publish-secret-name: csi-s3-secret
  csi.storage.k8s.io/node-publish-secret-namespace: kube-system
EOF

kubectl apply -f storageclass.yaml

PVC deployment test

cd csi-s3/deploy/kubernetes/examples
kubectl apply -f pvc.yaml
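For readers without the repository at hand, a claim equivalent to the one applied here would presumably look like the sketch below. It is reconstructed from the `kubectl get pvc` output in this article (name `csi-s3-pvc`, 5Gi, RWO, class `csi-s3`) and is not copied from the repository; the file name `pvc-sketch.yaml` and the `default` namespace are assumptions.

```shell
# Write a PVC manifest that requests a 5Gi ReadWriteOnce volume from the csi-s3 StorageClass.
# The file name and the namespace are hypothetical.
cat > pvc-sketch.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: csi-s3-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
  storageClassName: csi-s3
EOF
```

It can be applied with `kubectl apply -f pvc-sketch.yaml` in place of the repository file.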

验证:

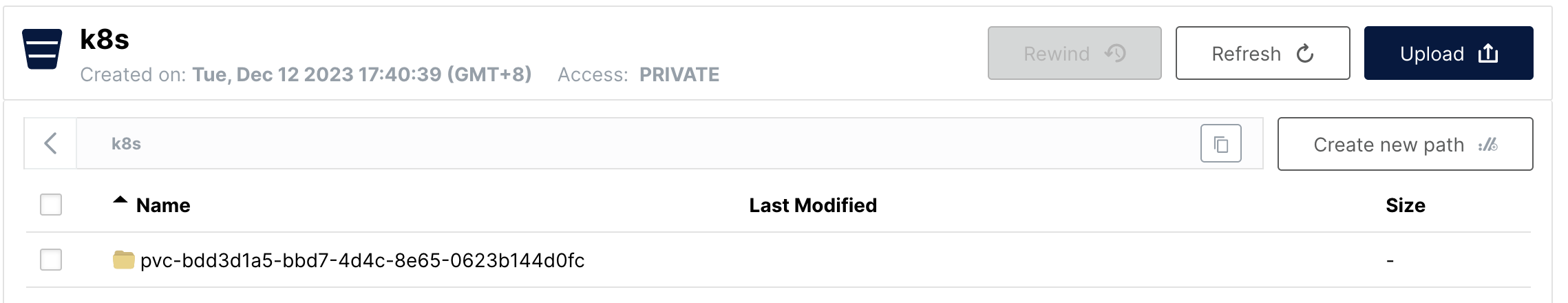

[root@k8s-m01 ~]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

csi-s3-pvc Bound pvc-bdd3d1a5-bbd7-4d4c-8e65-0623b144d0fc 5Gi RWO csi-s3 10m

minio 上看到 pvc 目录:
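A Bound PVC only proves that provisioning works; attaching and mounting are exercised only once a pod uses the claim. Here is a minimal sketch of a test pod. The pod name `csi-s3-test-nginx`, the file name `pod-sketch.yaml` and the `default` namespace are assumptions, while the claim name and the mount path `/var/lib/www/html` are the ones that appear in this article.

```shell
# Write a pod manifest that mounts the csi-s3-pvc claim at /var/lib/www/html.
# Pod name, file name and namespace are hypothetical.
cat > pod-sketch.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: csi-s3-test-nginx
  namespace: default
spec:
  containers:
    - name: csi-s3-test-nginx
      image: nginx
      volumeMounts:
        - name: webroot
          mountPath: /var/lib/www/html
  volumes:
    - name: webroot
      persistentVolumeClaim:
        claimName: csi-s3-pvc
        readOnly: false
EOF
```

After `kubectl apply -f pod-sketch.yaml`, a write test such as `kubectl exec csi-s3-test-nginx -- touch /var/lib/www/html/hello_world` should create an object under the volume's directory in the bucket.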

Two minor issues

1

During testing, both the PVC and the PV were created successfully, but mounting the volume into a pod failed with:

Events:
  Type     Reason              Age    From                     Message
  ----     ------              ----   ----                     -------
  Normal   Scheduled           3m26s  default-scheduler        Successfully assigned ruoyi/test-pod to k8s-w01
  Warning  FailedAttachVolume  76s    attachdetach-controller  AttachVolume.Attach failed for volume "pvc-87024db5-411c-4c88-b88d-cdc52be24dea" : Attach timeout for volume k8s/pvc-87024db5-411c-4c88-b88d-cdc52be24dea
  Warning  FailedMount         67s    kubelet                  Unable to attach or mount volumes: unmounted volumes=[nfs-pvc], unattached volumes=[kube-api-access-7dxv4 nfs-pvc]: timed out waiting for the condition

The same problem is reported in an issue on GitHub:

https://github.com/ctrox/csi-s3/issues/80#issuecomment-1527451694
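The linked comment is not reproduced here, so the commands below are only a diagnostic sketch for narrowing down an attach timeout, not the fix from the issue. The pod name `csi-attacher-s3-0` is an assumption derived from the StatefulSet name in the manifest above; the other names come from that manifest.

```shell
# List VolumeAttachment objects and see whether the volume is stuck with ATTACHED=false.
kubectl get volumeattachment

# Look at the external-attacher sidecar logs for permission or API errors.
kubectl -n kube-system logs csi-attacher-s3-0 -c csi-attacher --tail=50

# Print the attacher image actually running in the cluster.
kubectl -n kube-system get statefulset csi-attacher-s3 \
  -o jsonpath='{.spec.template.spec.containers[0].image}{"\n"}'

# Inspect the rules granted to the attacher, in particular those for volumeattachments.
kubectl get clusterrole external-attacher-runner -o yaml
```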

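2

After the volume mounted successfully, writes to the directory failed with the error below. The fix is to change the mounter in the default StorageClass from rclone to s3fs.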

touch: cannot touch '/var/lib/www/html/hello_world': Input/output error
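Because the parameters of an existing StorageClass cannot be edited in place, switching the mounter means deleting and recreating the object. The sketch below assumes the StorageClass is named `csi-s3` and that `storageclass.yaml` is the file created earlier with `mounter: s3fs`.

```shell
# Show which mounter the current StorageClass uses.
kubectl get storageclass csi-s3 -o jsonpath='{.parameters.mounter}{"\n"}'

# StorageClass parameters are immutable, so delete the object and apply the corrected file.
kubectl delete storageclass csi-s3
kubectl apply -f storageclass.yaml
```

Volumes provisioned before the change may keep the old mounter, so recreating the test PVC afterwards is the safer assumption.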